IPFS Introduction

IPFS nodes use a peer-to-peer file sharing protocol and provide a decentralized, censorship-resistant mechanism for storing large files and data structures off-chain. Upon upload, files are shared and disseminated across the network, and they can then be referenced on the chain by one or more hashes rather than being stored there in their entirety. The uploader of a file can optionally encrypt it and/or structure the data within a configured directory.

Why a p2p filesystem for off-chain storage?

Also take a look at the Document Store for private file exchange.

IPFS, in a similar manner to blockchain, addresses the issue of central server data ownership by dispersing file contents across multiple nodes. This peer-to-peer file sharing technique guards against censorship and unilateral deletion, because no single party owns or controls the data once it has been uploaded to the network. If a user chooses to “pin” a file to one or more of their IPFS nodes, those nodes retain the file so that it remains available to the user and to the rest of the network. Pinning, while not strictly necessary, preserves a file so that the network does not risk losing critical blocks as nodes go offline and/or perform database cleanups.

The IPFS user interface provides a clean and simple experience for uploading, retrieving and viewing files. Upon upload, files are split into 256 KB chunks, each identified by a unique hash, and disseminated to various nodes in the network. Files can then be immediately retrieved and inspected by referencing the corresponding hash. This combination of distributed storage and content-based addressing (as opposed to location-based addressing) makes IPFS both highly performant and reliably persistent. As such, IPFS can serve as a trusted data store for large files (e.g. images and multi-page contracts), with the blockchain layer needing only a reference to the hash.
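The idea of chunking a file and addressing each chunk by its hash can be illustrated with standard command-line tools. The following is only a sketch of the principle, not of IPFS itself: real IPFS nodes link chunks into a Merkle DAG and encode the hashes as content identifiers, whereas this example simply prints one SHA-256 digest per chunk. The function name `chunk_hashes` is hypothetical.

```shell
# Hypothetical helper: split a file into fixed-size chunks and print one
# SHA-256 digest per chunk, in order. Runs in a subshell so that its
# variables do not leak into the caller.
chunk_hashes() (
  file=$1
  chunk_size=${2:-262144}          # 256 KiB, mirroring the chunk size described above
  tmpdir=$(mktemp -d) || exit 1
  split -b "$chunk_size" "$file" "$tmpdir/chunk."
  for chunk in "$tmpdir"/chunk.*; do
    [ -e "$chunk" ] || continue    # an empty input file produces no chunks
    sha256sum "$chunk" | cut -d' ' -f1
  done
  rm -rf "$tmpdir"
)

# Example usage:
#   chunk_hashes ./contract.pdf
```

Chunks with identical bytes produce identical digests, which is what allows content-addressed systems to locate data by what it is rather than where it lives.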

Deploying an IPFS Node

You can provision IPFS nodes in one of two ways: through the Kaleido Console UI or through the Admin API. For users unfamiliar with the Kaleido REST API, the console is the recommended path. Both approaches result in a new IPFS node running within the specified environment.

Via the console

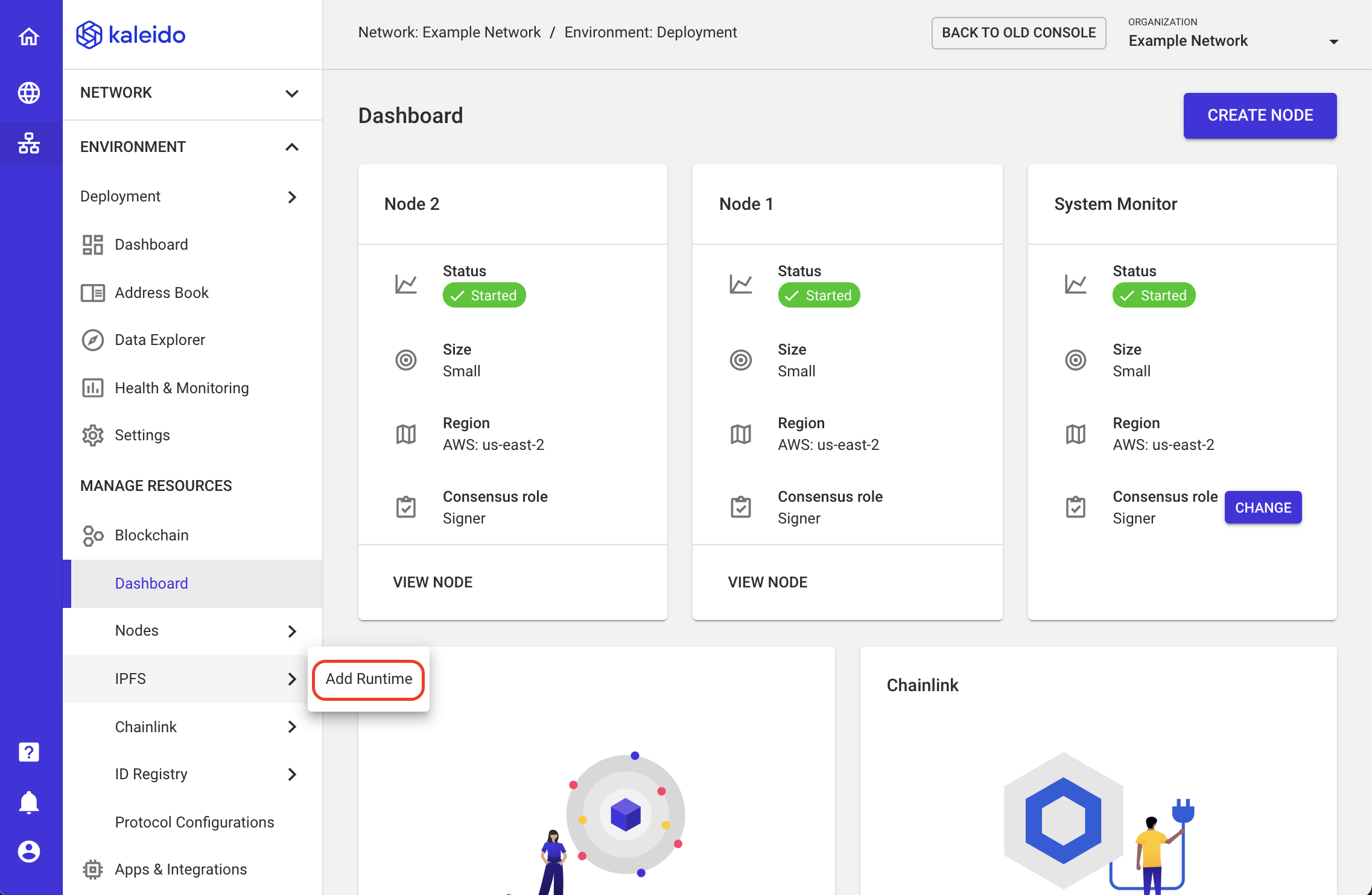

- From within an existing environment dashboard, expand the Blockchain section and hover on IPFS in the left-hand navigation menu. Click Add Runtime.

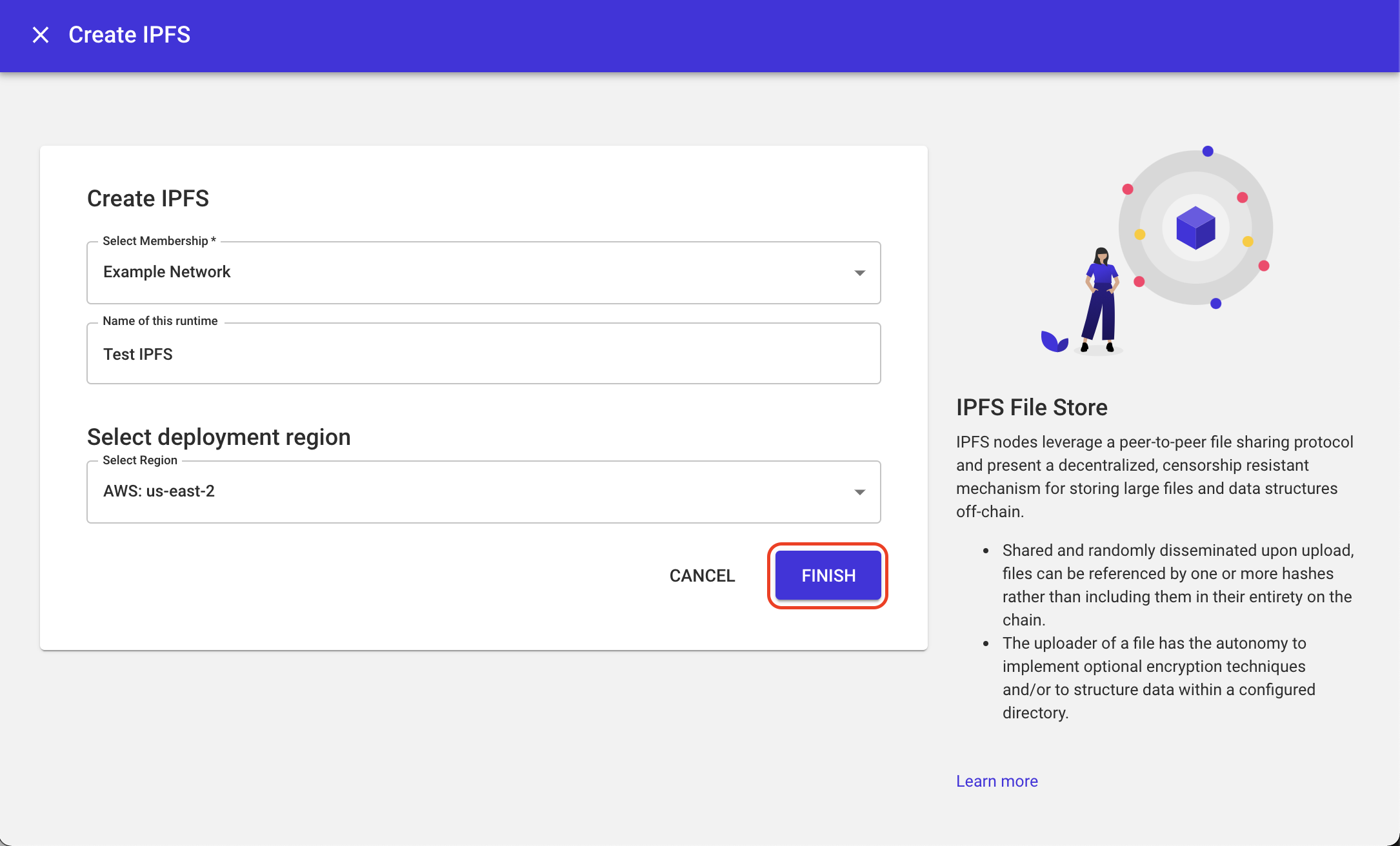

On the runtime configuration screen:

- Select a membership to bind the runtime to.

- Optionally provide a name for your IPFS node, e.g. IPFSnode1.

- Select a deployment region.

- Click FINISH.

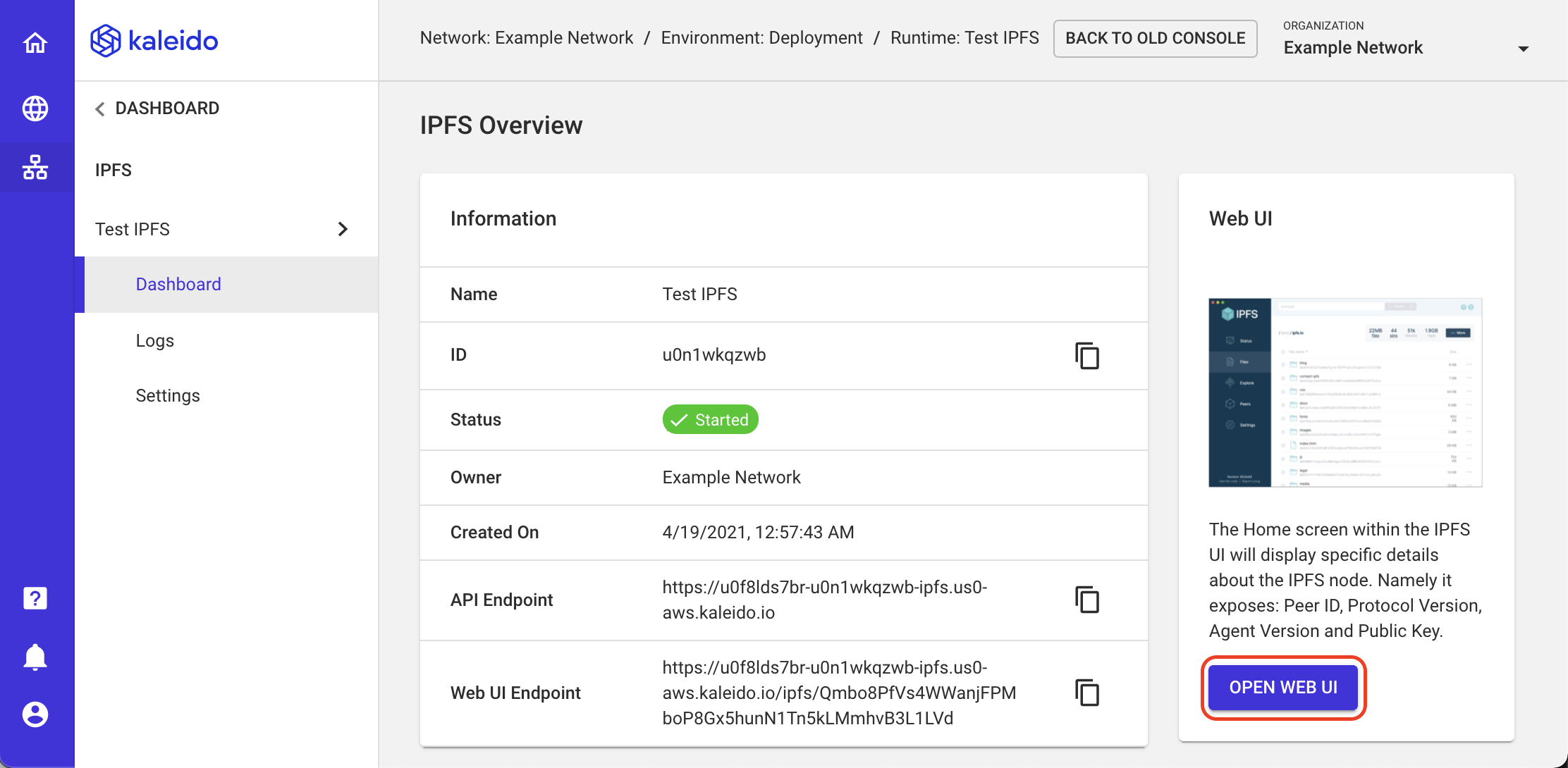

- Once provisioning has finished, you can access the IPFS Web UI from the Kaleido Console. Click OPEN WEB UI to continue.

Via the API

NOTE: The following deployment approach assumes a strong understanding of the Kaleido APIs. Please refer to the Kaleido Resource Model for object relationships, the API 101 topic for sample CRUD operations and api.kaleido.io for detailed descriptions of the various endpoints and routes.

To programmatically create an IPFS node, specify the consortia and environment IDs in the path and POST to the /services endpoint with a name, the service type and membership ID in the body of the call. The sample commands that follow assume that these environment variables have been set:

export APIURL="https://console.kaleido.io/api/v1"

export APIKEY="YOUR_API_KEY"

export HDR_AUTH="Authorization: Bearer $APIKEY"

export HDR_CT="Content-Type: application/json"

If you are targeting an environment outside of the US, make sure to modify your URL accordingly. The ap qualifier resolves to Sydney, while ko resolves to Seoul:

export APIURL="https://console-eu.kaleido.io/api/v1"

export APIURL="https://console-ap.kaleido.io/api/v1"

export APIURL="https://console-ko.kaleido.io/api/v1"
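If you switch between regions often, the URL selection can be wrapped in a small function. This is a minimal sketch: `kaleido_api_url` is a hypothetical helper, and the `us` label for the default endpoint is an assumption made here for convenience. Only the eu, ap and ko qualifiers come from the URLs listed above.

```shell
# Hypothetical helper: map a region qualifier to the console API base URL.
# "us" is an assumed label for the default (unqualified) endpoint.
kaleido_api_url() {
  case "${1:-us}" in
    us)       echo "https://console.kaleido.io/api/v1" ;;
    eu|ap|ko) echo "https://console-$1.kaleido.io/api/v1" ;;
    *)        echo "unknown region qualifier: $1" >&2; return 1 ;;
  esac
}

# Example usage:
#   export APIURL="$(kaleido_api_url ap)"
```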

Use the POST method to provision the service and optionally format the output using jq:

# replace the consortia_id, environment_id and membership_id placeholders with your own IDs

curl -X POST -H "$HDR_AUTH" -H "$HDR_CT" "$APIURL/consortia/{consortia_id}/environments/{environment_id}/services" -d '{"name":"exampleIPFSnode", "service":"ipfs", "membership_id":"{membership_id}"}' | jq

The POST request returns a JSON payload containing the service ID. Next, you can call a GET on the /services endpoint and specify the service ID for your IPFS node. For example:

curl -X GET -H "$HDR_AUTH" -H "$HDR_CT" "$APIURL/consortia/{consortia_id}/environments/{environment_id}/services/{service_id}" | jq

This call returns a JSON payload containing the node-specific details. Most importantly, it provides the targetable URL for interacting with your IPFS node. For example:

{

"_id": "u0ria6l9rx",

"name": "exampleIPFSnode",

"service": "ipfs",

"membership_id": "u0bvfy5467",

"details": {

"datastore_type": "flatfs"

},

"service_guid": "1878bcdf-a5b5-45a9-b279-5f8bb38d36da",

"service_type": "member",

"state": "started",

"_revision": "1",

"created_at": "2018-09-28T15:17:18.687Z",

"environment_id": "u0iwnhf0ir",

"urls": {

"http": "https://u0kn2j7tub-u0tbrb4lmi-ipfs.kaleido.io",

"webui": "https://u0kn2j7tub-u0tbrb4lmi-ipfs.kaleido.io/ipfs/Qmbo8PfVs4WWanjFPMboP8Gx5hunN1Tn5kLMmhvB3L1LVd"

},

"updated_at": "2018-09-28T15:17:23.184Z"

}
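In scripts, the fields of interest in this payload are usually the service ID, the state and the HTTP URL. The sketch below shows one way to pull them out with jq, assuming the response has the same shape as the sample payload. `service_field` is a hypothetical helper and not part of any Kaleido tooling.

```shell
# Hypothetical helper: read a service payload on stdin and print the
# field selected by a jq filter (jq prints "null" if the field is absent).
service_field() {
  jq -r "$1"
}

# Example usage, assuming the variables and placeholders from the calls above:
#   payload=$(curl -s -H "$HDR_AUTH" -H "$HDR_CT" \
#     "$APIURL/consortia/{consortia_id}/environments/{environment_id}/services/{service_id}")
#   IPFS_URL=$(printf '%s' "$payload" | service_field '.urls.http')
#   STATE=$(printf '%s' "$payload" | service_field '.state')
```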

As is the case with a regular node, the ingress to the IPFS node is TLS protected. All calls require the presence of application credentials that have been generated against the same membership ID that provisioned the node.
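As a rough illustration of an authenticated call, the sketch below uploads, retrieves and pins a file through the standard IPFS HTTP API routes (/api/v0/add, /api/v0/cat and /api/v0/pin/add). It assumes that the application credentials are supplied as HTTP basic auth and that IPFS_URL holds the urls.http value from the service payload. The variable names APP_CRED_USER and APP_CRED_PASS and all function names are hypothetical.

```shell
# Hypothetical helper: build the URL for an IPFS HTTP API command.
# $1 = node base URL, $2 = command such as "add", "cat" or "pin/add".
ipfs_api_url() {
  printf '%s/api/v0/%s\n' "${1%/}" "$2"
}

# Upload a local file ($1); the JSON response includes the resulting hash.
# Assumes basic auth with application credentials (an assumption, see above).
ipfs_add() {
  curl -sS -u "$APP_CRED_USER:$APP_CRED_PASS" -X POST \
    -F "file=@$1" "$(ipfs_api_url "$IPFS_URL" add)"
}

# Retrieve file contents by hash ($1).
ipfs_cat() {
  curl -sS -u "$APP_CRED_USER:$APP_CRED_PASS" -X POST \
    "$(ipfs_api_url "$IPFS_URL" cat)?arg=$1"
}

# Pin a hash ($1) on this node.
ipfs_pin() {
  curl -sS -u "$APP_CRED_USER:$APP_CRED_PASS" -X POST \
    "$(ipfs_api_url "$IPFS_URL" pin/add)?arg=$1"
}
```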

All environments on release 1.0.36 or later support badger as the datastore for your IPFS node. To deploy a node with badger as its datastore, use:

# replace the consortia_id, environment_id and membership_id placeholders with your own IDs

curl -X POST -H "$HDR_AUTH" -H "$HDR_CT" "$APIURL/consortia/{consortia_id}/environments/{environment_id}/services" -d '{"name":"exampleIPFSnodeWithBadger", "service":"ipfs", "membership_id":"{membership_id}", "details": {"datastore_type":"badgerds"}}' | jq

If no datastore_type is provided, the default datastore of flatfs will be used.
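The two request bodies differ only in the optional details object, so they can be generated by one function. This is a minimal sketch with a hypothetical helper name, `ipfs_service_body`. It does not JSON-escape its arguments, so it assumes simple alphanumeric names and IDs.

```shell
# Hypothetical helper: build the JSON body for the POST to /services.
# $1 = service name, $2 = membership ID, $3 = optional datastore_type.
# Omitting $3 leaves out "details", so the default datastore (flatfs) applies.
ipfs_service_body() {
  if [ -n "${3:-}" ]; then
    printf '{"name":"%s","service":"ipfs","membership_id":"%s","details":{"datastore_type":"%s"}}\n' "$1" "$2" "$3"
  else
    printf '{"name":"%s","service":"ipfs","membership_id":"%s"}\n' "$1" "$2"
  fi
}

# Example usage, with the same placeholders as the commands above:
#   curl -X POST -H "$HDR_AUTH" -H "$HDR_CT" \
#     "$APIURL/consortia/{consortia_id}/environments/{environment_id}/services" \
#     -d "$(ipfs_service_body exampleIPFSnodeWithBadger {membership_id} badgerds)" | jq
```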

As before, the POST request returns a JSON payload containing the service ID. Next, you can call a GET on the /services endpoint and specify the service ID for your IPFS node. For example:

curl -X GET -H "$HDR_AUTH" -H "$HDR_CT" "$APIURL/consortia/{consortia_id}/environments/{environment_id}/services/{service_id}" | jq

This call returns a JSON payload containing the node-specific details, including the configured datastore type and the targetable URL for interacting with your IPFS node. For example:

{

"_id": "u0ria6l9rx",

"name": "exampleIPFSnodeWithBadger",

"service": "ipfs",

"membership_id": "u0bvfy5467",

"details": {

"datastore_type": "badgerds"

},

"service_guid": "1878bcdf-a5b5-45a9-b279-5f8bb38d36da",

"service_type": "member",

"state": "started",

"_revision": "1",

"created_at": "2018-09-28T15:17:18.687Z",

"environment_id": "u0iwnhf0ir",

"urls": {

"http": "https://u0kn2j7tub-u0tbrb4lmi-ipfs.kaleido.io",

"webui": "https://u0kn2j7tub-u0tbrb4lmi-ipfs.kaleido.io/ipfs/Qmbo8PfVs4WWanjFPMboP8Gx5hunN1Tn5kLMmhvB3L1LVd"

},

"updated_at": "2018-09-28T15:17:23.184Z"

}

Resource Sizing

The following table outlines the resource limits for each provisioned IPFS service size.

| | Small | Medium | Large |
|---|---|---|---|
| vCPU | 0.5 | 1 | 2 |
| Memory | 1 GB | 2 GB | 4 GB |